Getting started

When we started talking about building an MTA, there were three items we knew had to be top of mind.

- The bright, creative people who need a powerful MTA also want access to the code so they can tweak it to their unique needs.
- It needed to have a better licensing plan than what is available in the market now.
- It needed to be faster and better than anything on the market today, or it would not be worth the effort.

The first item is easy - open-source the code. We have discussed this before and we will cover it again, but it is important to us to make sure we develop in the open and provide open access to the code for our users. The second item is also easy - eliminate the license fee entirely and find another way to earn income from the work. The third item is a bigger ask, and our collective 45+ years of experience with email told us it had to be secure, easy to implement and use, and above all, needed to be able to process at least a million messages per hour. But even that was not going to be enough to convince commercial MTA users to look at a new, open-source MTA. The target number had to be much, much faster, and that number had to start with a “B”.

Sending a BILLION messages a day

When Wez Furlong, the brilliant mind behind the KumoMTA code, started investigating the concept of creating a highly performant MTA, he leaned on his experience with Rust to get us the raw power we needed to hit at least the one-million-an-hour mark that is table stakes for this industry. We hit that out of the gate but wanted to ensure we could fully saturate any hardware we threw at it. Soon after, we had a production release that maximized resources and also included security and performance improvements to make deployment very easy.

The rest of this post details some performance metrics that will be important in planning a production deployment. The TL;DR here is that when you tune for peak performance, your bottleneck will probably be the network adapter. A single KumoMTA server with 96 cores will easily process 60 Million messages per hour if you redirect the messages to /dev/null. In the real world, we need to deal with network adapters, Internet response lag, and the joys of SMTP handshakes. These all impact real-world performance, but configuring a server to maintain 7 Million messages per hour and clustering 7 of them together gets you a throughput of 1 Billion+ per day.

Breaking stuff

My job here is to be customer-zero; I break stuff and then figure out how to prevent that breakage from happening in a customer environment. In the course of writing this post, I have built and broken about 50 AWS and Azure server instances, so you don't have to. You’re welcome.

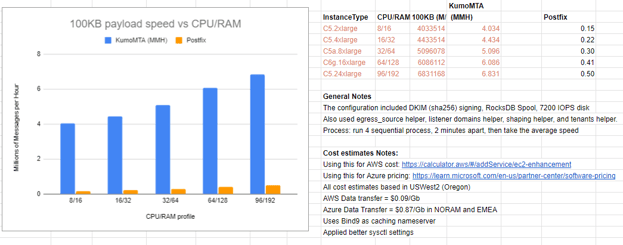

The graph above shows the results of testing KumoMTA at high volume compared to the cost of operation in both AWS and Azure. I have also added Postfix speed testing values to add a sense of scale. Please note that the costs calculated here are approximate and based on the AWS and Azure documentation. YOUR MILEAGE MAY VARY.

Testing was done using the Traffic-Gen tool we included in the repo because we needed something that was fast enough to not break while testing. This tool generated 100,000 100KB messages and burst them over 2000 connections to simulate real-world SMTP injection. You could potentially gain some speed using the HTTP Inject API, but I will save that conversation for another day.

Looking at this graph, it seems that the sweet spot for a high-volume sender is likely a 64-core, 128 GB AWS reserved instance at about $4400 a month. That value is based on 1 million messages an hour for 10 hours a day, which delivers 300 Million messages in a month at $0.014 CPM (cost per thousand messages). If you add Gold support to that, it comes up to $0.025 CPM. Keep in mind that the numbers here assume you are deploying to public cloud. If you colocate bare-metal servers, you can likely reduce that significantly.
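The CPM figure can be reproduced with a few lines of arithmetic. This is only a sanity-check sketch: the function names are made up for illustration, a 30-day month is assumed, and the inputs are the approximate numbers quoted above.

```python
def monthly_volume(messages_per_hour: int, hours_per_day: int, days: int = 30) -> int:
    """Messages delivered in a month (30-day month assumed)."""
    return messages_per_hour * hours_per_day * days


def cpm(monthly_cost_usd: float, messages: int) -> float:
    """Cost per thousand messages, in US dollars."""
    return monthly_cost_usd / (messages / 1000)


volume = monthly_volume(1_000_000, 10)   # 1 million an hour, 10 hours a day
print(f"{volume:,}")                     # 300,000,000
print(round(cpm(4400, volume), 4))       # 0.0147
```

The result lands a little under a cent and a half per thousand messages, in line with the roughly $0.014 CPM quoted above.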

Now if you really need to hit that 1 billion a day mark, you can install on seven (7) of the 96-CPU instances and run injection in parallel to hit 1.1 billion+ over 24 hours. To put that into perspective, you would need at least 85 Postfix servers to send the same volume.
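The cluster math is simple enough to check by hand, but a small sketch makes it easy to plug in your own numbers. The function name is hypothetical, and the 7 Million messages per hour per server is the sustained rate mentioned earlier in this post.

```python
def daily_cluster_throughput(servers: int, messages_per_hour: int, hours: int = 24) -> int:
    """Total messages a cluster can send in a day at a sustained hourly rate."""
    return servers * messages_per_hour * hours


total = daily_cluster_throughput(servers=7, messages_per_hour=7_000_000)
print(f"{total:,}")  # 1,176,000,000
```

Swap in the sustained rate you actually measure on your own hardware; real-world delivery rarely holds a flat rate for 24 hours straight.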

At these volumes, you are going to absolutely destroy your normal DNS server, so it is imperative to set up a local caching nameserver. I used BIND 9 in the testing above, and it is dead simple to install.
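To see why a cache matters so much here, consider a toy model of a caching resolver. This is not how BIND 9 works internally and it does not touch the network: `CachingResolver` and `fake_upstream` are invented for illustration, and a single fixed TTL stands in for the per-record TTLs a real nameserver honors.

```python
import time
from typing import Callable, Dict, Tuple


class CachingResolver:
    """Toy TTL cache in front of a lookup function (illustration only)."""

    def __init__(self, lookup: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._lookup = lookup
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[float, str]] = {}
        self.upstream_queries = 0  # how often we had to ask the "real" DNS

    def resolve(self, name: str) -> str:
        now = self._clock()
        hit = self._cache.get(name)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh: answered locally
        self.upstream_queries += 1
        answer = self._lookup(name)
        self._cache[name] = (now + self._ttl, answer)
        return answer


def fake_upstream(name: str) -> str:
    """Stand-in for a real DNS query; returns a made-up MX host."""
    return f"mx.{name}"


resolver = CachingResolver(fake_upstream)
for _ in range(100_000):          # 100,000 messages to the same domain
    resolver.resolve("example.com")
print(resolver.upstream_queries)  # 1
```

Bulk mail tends to concentrate on a modest number of destination domains, so most lookups are repeats, and a local cache keeps them off your upstream DNS.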

Disk IOPS is also going to be a critical factor in performance tuning. Initial testing with 120 IOPS storage limited processing to under 3 million messages per hour (MMH) regardless of the instance size. For this testing, I upgraded that to 7200 IOPS storage. I found that going up to the superfast 256k IOPS storage really did not buy much of a performance boost, and the reality is that you are probably going to hit a network limit before you hit the disk IOPS limit. Sending to /dev/null got me a speed of 131 MMH, but that is too fast for even a 25 Gbps NIC.
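A back-of-the-envelope calculation shows why 131 MMH outruns a 25 Gbps NIC. The sketch below assumes the 100KB test messages described earlier, treats 100KB as 100,000 bytes, and ignores SMTP, TCP, and TLS overhead, so real requirements would be somewhat higher. The function names are hypothetical.

```python
def required_gbps(messages_per_hour: float, message_bytes: int) -> float:
    """Payload bandwidth needed to sustain a message rate, in Gbps."""
    bits_per_second = messages_per_hour * message_bytes * 8 / 3600
    return bits_per_second / 1e9


def max_messages_per_hour(link_gbps: float, message_bytes: int) -> float:
    """Upper bound on hourly messages a link can carry (payload only)."""
    messages_per_second = link_gbps * 1e9 / (message_bytes * 8)
    return messages_per_second * 3600


print(round(required_gbps(131e6, 100_000), 1))          # 29.1
print(round(max_messages_per_hour(25, 100_000) / 1e6, 1))  # 112.5
```

Under those assumptions, 131 MMH needs about 29 Gbps of payload alone, while a 25 Gbps link tops out around 112 MMH. Smaller messages shift the limit accordingly.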

While we are talking about disk storage, it is important to discuss spool types. KumoMTA includes two different spool storage options. "LocalDisk" writes each message to a file in the traditional way, so if you install it on "spinning disk" storage that has relatively low IOPS, performance will suffer. "RocksDB" uses memory and intelligent storage to achieve a performance gain similar to using a deferred_spool setting, while greatly reducing the risk that comes with spooling from RAM. This testing was all done with a RocksDB configuration.

It is also important to tweak the sysctl settings on any Linux server to maximize resource usage. Out of the box, Linux servers are configured for general-purpose use. If you are tuning for maximum performance, at the very least you will need to reuse connections in the TIME_WAIT state and raise the memory limits as far as your system allows. This is how I configured for this testing, but EVERY SYSTEM IS DIFFERENT, so be sure to use your own environment and calculate appropriate values.

vm.max_map_count = 768000

net.core.rmem_default = 32768

net.core.wmem_default = 32768

net.core.rmem_max = 262144

net.core.wmem_max = 262144

fs.file-max = 250000

net.ipv4.ip_local_port_range = 5000 63000

net.ipv4.tcp_tw_reuse = 1

kernel.shmmax = 68719476736

net.core.somaxconn = 1024

kernel.shmmni = 4096
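Before changing anything, it can help to see how a server's current values compare with the list above. The following is a minimal sketch, assuming a Linux host where sysctl keys map to files under /proc/sys; the helper names are made up for this example, and the script only reads values and never writes them.

```python
from pathlib import Path
from typing import Dict, Optional, Tuple

# The values used in this post's testing; adjust for your own system.
DESIRED = """
vm.max_map_count = 768000
net.core.rmem_default = 32768
net.core.wmem_default = 32768
net.core.rmem_max = 262144
net.core.wmem_max = 262144
fs.file-max = 250000
net.ipv4.ip_local_port_range = 5000 63000
net.ipv4.tcp_tw_reuse = 1
kernel.shmmax = 68719476736
net.core.somaxconn = 1024
kernel.shmmni = 4096
"""


def parse_sysctl(text: str) -> Dict[str, str]:
    """Parse 'key = value' lines, skipping blanks and comments."""
    settings: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = " ".join(value.split())
    return settings


def proc_path(key: str, root: str = "/proc/sys") -> Path:
    """Map a dotted sysctl key to its file, e.g. net.core.somaxconn."""
    return Path(root, *key.split("."))


def report(desired: Dict[str, str],
           root: str = "/proc/sys") -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {key: (wanted, current)}; current is None if unreadable."""
    rows: Dict[str, Tuple[str, Optional[str]]] = {}
    for key, want in desired.items():
        try:
            have: Optional[str] = " ".join(proc_path(key, root).read_text().split())
        except OSError:
            have = None
        rows[key] = (want, have)
    return rows


if __name__ == "__main__":
    for key, (want, have) in report(parse_sysctl(DESIRED)).items():
        status = "ok" if want == have else "differs"
        print(f"{key}: want={want} have={have} ({status})")
```

A "differs" line is not necessarily a problem; a larger value on your system may be perfectly fine, so treat the output as a starting point for your own tuning.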

But that is a LOT of Email

Ok, so not everyone needs to send a billion messages a day, or even a million. What if your needs are more in the 1 million a MONTH range? That profile actually covers the vast majority of email senders worldwide, so of course, we tested for that too. If you are replacing Postfix or want to move off your Cloud SaaS email service, KumoMTA can be a very cost-effective solution, and it is easy to set up and run. If your monthly outbound volume is closer to 1 Million messages, a single AWS a1.xlarge instance can handle that load with plenty of room for expansion and should cost less than $100/month. Compare that to some SaaS email provider costs, and it may be worth the effort.

What now?

Clone and configure KumoMTA, then let us know what performance you can get from it. We would love to see someone beat these numbers. Follow us on LinkedIn for more updates, and bookmark the website to make sure you always have the latest news.