Over the years the MTA landscape has grown more sophisticated. In the beginning, Open-Source MTAs like Postfix and Sendmail were built around a single-tenant idiom: the server was configured by the sending organization, for the use of the sending organization; the server would have a single set of queues and operate over a single IP address, using a single process architecture.

As commercial and large-scale email grew, senders needed to configure servers not only for their own traffic but also for their users' traffic. At first, this meant finding ways to make an Open-Source MTA fit their expanded needs.

While this worked, commercial MTAs offered higher performance and multi-tenancy; however, although they introduced the virtual server concept, they did not truly account for tenants.

In this entry, I'll show you how the Native Tenant Awareness of KumoMTA enables a much better experience for administrators and deliverability engineers.

Multi-Tenancy is a Game Changer

When I was a Sales Engineer for a commercial MTA vendor, we once visited a client that had a full rack of 1U email servers all running Postfix, and a unique management system that they were rightly proud of: When a customer queued a mailing, the management system would identify an idle Postfix server, shut it down, change the IP address and domain configuration to that of the customer, then start the Postfix server back up and begin injecting mail.

This approach enabled them to handle up to 42 customers simultaneously, each on their own IP address and each with isolated queues. One challenge was that while the initial delivery attempts for a mailing happened relatively quickly, any deferrals tied up the server and kept it from being used by the next customer. They handled this by configuring short retry windows and limiting how long a message could stay in the queue.

When we introduced the commercial MTA, things really changed: because the MTA supported multiple IPs and separate queueing, those customers could suddenly all send simultaneously. In addition, because the MTA was based on a scheduler model, its sending throughput was much higher than Postfix's, which meant that a single MTA matched the performance of those 42 servers, with a second for redundancy.

The multi-tenancy capabilities of the commercial MTA saved them a fortune, not only in server resources and the associated hosting costs but also in time and complexity, because they no longer needed a management system to keep all the servers consistently configured and to orchestrate the start/stop cycle.

Virtual Servers are Only Partially Multi-Tenant

In the intervening years, a number of commercial MTA solutions have appeared that offer multi-tenancy by way of the "Virtual Server" or "Virtual MTA" concept, known by a number of names including Binding, VMTA, Account, and Transport. While the implementation details vary, these constructs typically allow the admin to configure multiple sending IP addresses, group them, and assign messages to them (or to a pool, which assigns each message to an individual member).
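To make the construct concrete, here's a minimal Python sketch of a generic virtual server and pool. The class names, the round-robin member selection, and the IP addresses (taken from the 192.0.2.0/24 documentation range) are assumptions for illustration; real products differ in how they pick a pool member. Notice that nothing in the model records which tenant a message belongs to.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VirtualServer:
    """Hypothetical model of a generic virtual server (VMTA, binding, ...): a name bound to one sending IP."""
    name: str
    source_ip: str


@dataclass
class Pool:
    """A named group of virtual servers; a message assigned to the pool is handed to one member."""
    name: str
    members: list[VirtualServer]
    # Index of the next member to use; round-robin is an assumption, not a specific product's behavior.
    _next: int = field(default=0, init=False, repr=False, compare=False)

    def pick_member(self) -> VirtualServer:
        member = self.members[self._next % len(self.members)]
        self._next += 1
        return member


shared = Pool("shared-warmup", [VirtualServer("vs1", "192.0.2.10"), VirtualServer("vs2", "192.0.2.11")])
# The pool only knows about IPs; the customer behind each message is not tracked anywhere.
for _ in range(3):
    print(shared.pick_member().name)
# prints vs1, vs2, vs1 on separate lines
```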

When a multi-tenant sender uses dedicated IPs, the virtual server (or pool of virtual servers) represents the tenant (or customer), and the virtual server (or pool) ID can be used when reading logs to correlate activity back to the customer in question. Each tenant's traffic is isolated, so any deliverability issues are limited to that tenant, and suspending traffic to resolve them impacts only that customer.

If that customer has reason to use multiple pools (say, one pool for bulk sends and another for transactional traffic), the two pools can be named in a predictable fashion so that log data is still attributed to the right customer, and if the customer needs to be suspended, automation can suspend all of the relevant customer IPs or pools.
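A predictable naming scheme is what makes that automation possible. Here's a minimal Python sketch assuming a hypothetical `<customer>-<purpose>` convention (for example `acme-bulk` and `acme-transactional`), where the purpose part never contains a hyphen; the function names are illustrative only.

```python
from typing import Iterable

# Hypothetical convention: "<customer>-<purpose>"; customer names may contain hyphens, purposes may not.
SEPARATOR = "-"


def customer_for_pool(pool_name: str) -> str:
    """Map a pool (or virtual server) ID from a log line back to its customer."""
    customer, _sep, _purpose = pool_name.rpartition(SEPARATOR)
    return customer  # empty string if the name does not follow the convention


def pools_to_suspend(all_pools: Iterable[str], customer: str) -> list[str]:
    """Every pool belonging to the customer, e.g. for an automated suspension."""
    return [p for p in all_pools if customer_for_pool(p) == customer]


pools = ["acme-bulk", "acme-transactional", "acme-corp-bulk", "globex-bulk"]
print(pools_to_suspend(pools, "acme"))  # ['acme-bulk', 'acme-transactional']
```

This only works because every pool is dedicated to one customer; a shared pool has no customer name to recover.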

While this works for a sender whose customers all have dedicated IPs, it's fairly common in the Email Service Provider (ESP) space to use shared IP pools. New customers are often assigned to a pool of mixed reputation and then promoted to progressively higher-reputation pools as their data proves them to be reputable senders.

These shared-pool customers often stay in a shared pool for a very long time if they don't send enough mail to justify dedicated IPs: mailbox providers often won't track reputation metrics for sources that don't send sufficient volume, so moving such a customer onto its own IP would leave it with a null IP reputation.

This is where the virtual server concept fails in a multi-tenant environment; at its core, a virtual server is just that: a collection of settings and queues that act as a virtual MTA. The virtual server revolves around an IP address, not a tenant, and when used to represent a shared resource, all concept of a tenant is lost.

When an ESP processes logs in this kind of environment, they have to rely on additional data points to distinguish customers, including X-headers or VERP strings. If such a customer needs to be suspended, it's difficult to isolate their traffic out of the pool (if it's even possible), and any throttling of individual tenants has to be done on the injecting side instead of within the MTA.
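In a shared pool, the tenant has to be reconstructed from data the injector embedded in each message. Here's a minimal Python sketch, assuming a hypothetical `X-Customer-ID` header and a hypothetical VERP layout of `bounce-<customer>+<local>=<domain>@<bounce-domain>`; every ESP defines its own conventions, and the pattern below is only one possibility.

```python
import re
from email import message_from_string
from typing import Optional

# Hypothetical header name and VERP layout; both are ESP-specific assumptions.
TENANT_HEADER = "X-Customer-ID"
VERP_PATTERN = re.compile(
    r"^bounce-(?P<tenant>[A-Za-z0-9_-]+)\+(?P<local>[^=]+)=(?P<domain>[^@]+)@"
)


def tenant_from_message(raw: str, return_path: str) -> Optional[str]:
    """Recover the tenant for a message sent through a shared virtual server.

    The virtual server ID in the log only identifies the IP or pool, so the
    tenant must come from data the injector put into the message itself.
    """
    msg = message_from_string(raw)
    header_value = msg.get(TENANT_HEADER)
    if header_value:
        return header_value.strip()
    match = VERP_PATTERN.match(return_path)
    if match:
        return match.group("tenant")
    return None  # the tenant cannot be determined from this record


raw = "From: news@acme.example\nX-Customer-ID: acme\nSubject: hi\n\nbody\n"
print(tenant_from_message(raw, "bounces@esp.example"))  # acme
print(tenant_from_message("Subject: hi\n\nbody\n", "bounce-globex+jane=example.com@bounces.esp.example"))  # globex
```

If the injector forgets the header and uses a plain return path, the function returns `None`, which is exactly the ambiguity described above.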

KumoMTA Brings a Native Tenant Implementation

When we were designing KumoMTA, the lack of true multi-tenancy was one of the shortcomings of existing solutions that we knew we had to address. We wanted to decouple tenants and IPs so that, while one may be associated with the other, there's no ambiguity about whether a virtual server represents a single tenant or a shared resource.

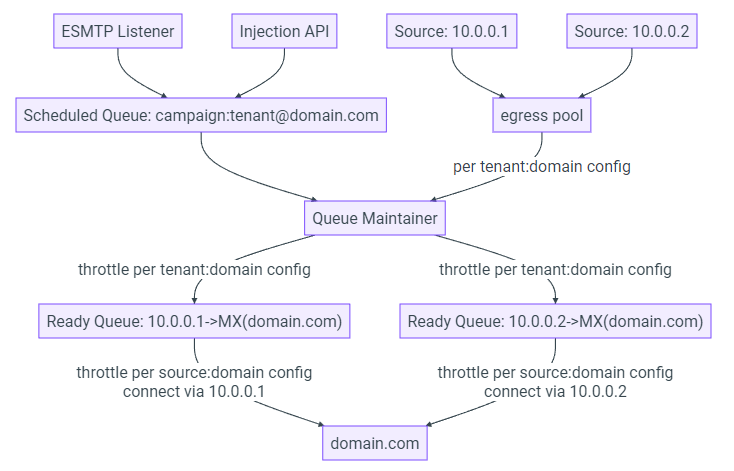

A picture is worth a thousand words, so here's what the queue flow looks like inside of KumoMTA:

This diagram is from The Configuration Concepts section of our User Guide.

When a message is injected into KumoMTA, it is loaded into a Scheduled Queue that stores all messages that are not being actively delivered. This queue can include both newly injected messages and messages that are awaiting a retry attempt.

The Scheduled Queue is structured with a separate queue for each combination of tenant, campaign, and destination domain. This means that no two tenants share a queue, even if they are both sending to the same destination, and two messages from the same tenant share a queue only if they belong to the same campaign and are headed to the same destination. As a result, the impact of queue backups is minimized, and no tenant or campaign waits behind any other tenant or campaign.
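The keying idea can be illustrated with a small Python model. This is a hypothetical sketch, not KumoMTA's code or configuration API: the `Message` fields, the `QueueKey` tuple, and the lowercasing of the destination domain are assumptions chosen to mirror the tenant, campaign, and destination domain described above.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import NamedTuple


class QueueKey(NamedTuple):
    tenant: str
    campaign: str
    domain: str


@dataclass
class Message:
    msg_id: str
    tenant: str
    campaign: str
    recipient: str

    @property
    def domain(self) -> str:
        # Destination domain of the recipient, normalized to lowercase.
        return self.recipient.rsplit("@", 1)[1].lower()


class ScheduledQueues:
    """Illustrative model only: one FIFO per (tenant, campaign, destination domain)."""

    def __init__(self) -> None:
        self.queues: dict[QueueKey, deque[Message]] = defaultdict(deque)

    def enqueue(self, msg: Message) -> QueueKey:
        key = QueueKey(msg.tenant, msg.campaign, msg.domain)
        self.queues[key].append(msg)
        return key


sq = ScheduledQueues()
sq.enqueue(Message("1", "acme", "spring-sale", "a@gmail.com"))
sq.enqueue(Message("2", "globex", "welcome", "b@gmail.com"))   # same domain, different tenant
sq.enqueue(Message("3", "acme", "spring-sale", "c@Gmail.com"))  # joins message 1's queue
print(len(sq.queues))  # 2
```

Even though all three messages go to the same destination, globex's message never waits behind acme's.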

All three granularities (tenant, campaign, and destination domain) are included in every log event (including when those events are delivered as webhooks or over AMQP), and when messages need to be purged or suspended, the operator (or automation) can target any of those granularities so that only the messages that need to be managed are affected.
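To show how those granularities narrow an operation, here's a hypothetical Python sketch of a suspension rule in which any of tenant, campaign, or domain may be left as a wildcard. The `Suspension` type and its matching logic are illustrative assumptions, not KumoMTA's admin API.

```python
from dataclasses import dataclass
from typing import Iterable, NamedTuple, Optional


class QueueKey(NamedTuple):
    tenant: str
    campaign: str
    domain: str


@dataclass(frozen=True)
class Suspension:
    """Hypothetical suspension rule; None acts as a wildcard for that granularity."""
    tenant: Optional[str] = None
    campaign: Optional[str] = None
    domain: Optional[str] = None

    def matches(self, key: QueueKey) -> bool:
        pairs = (
            (self.tenant, key.tenant),
            (self.campaign, key.campaign),
            (self.domain, key.domain),
        )
        return all(wanted is None or wanted == actual for wanted, actual in pairs)


def affected_queues(keys: Iterable[QueueKey], rule: Suspension) -> list[QueueKey]:
    """Only the queues the rule selects; every other tenant keeps sending."""
    return [k for k in keys if rule.matches(k)]


keys = [
    QueueKey("acme", "spring-sale", "gmail.com"),
    QueueKey("acme", "welcome", "yahoo.com"),
    QueueKey("globex", "welcome", "gmail.com"),
]
print(len(affected_queues(keys, Suspension(tenant="acme"))))                      # 2
print(len(affected_queues(keys, Suspension(tenant="acme", domain="gmail.com"))))  # 1
```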

Administrators have complete flexibility in how their IPs are allocated and pooled and in how messages from tenants are assigned to those pools, ranging from a simple dedicated approach, where the tenant name matches the pool name and the assignment is direct, to lookups against data sources that store the mapping between any given tenant or campaign and a pool.
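KumoMTA's own configuration is written as a Lua policy; the Python sketch below only illustrates the shape of such an assignment decision. The tenant names, pool names, the in-memory dictionary standing in for a data source, and the fallback order (dedicated pool, then tenant plus campaign, then tenant alone, then a default shared pool) are all hypothetical.

```python
from typing import Mapping, Optional

# Hypothetical data source; in practice this might be a database, a file, or an API.
POOL_ASSIGNMENTS: Mapping[tuple[str, Optional[str]], str] = {
    ("acme", "transactional"): "acme-transactional",
    ("acme", None): "acme-bulk",
    ("globex", None): "shared-high-reputation",
}
DEDICATED_POOLS = {"initech"}  # tenants whose pool is simply named after the tenant
DEFAULT_POOL = "shared-warmup"  # where unknown (e.g. new) tenants start


def select_pool(tenant: str, campaign: Optional[str] = None) -> str:
    """Choose an egress pool for a message (illustrative policy only)."""
    if tenant in DEDICATED_POOLS:
        # Dedicated approach: the tenant name is the pool name.
        return tenant
    # Most specific match first: tenant + campaign, then tenant alone.
    for key in ((tenant, campaign), (tenant, None)):
        if key in POOL_ASSIGNMENTS:
            return POOL_ASSIGNMENTS[key]
    return DEFAULT_POOL


print(select_pool("initech"))                 # initech
print(select_pool("acme", "transactional"))   # acme-transactional
print(select_pool("acme", "newsletter"))      # acme-bulk
print(select_pool("newco"))                   # shared-warmup
```

Because the tenant travels with the message regardless of which pool it lands in, moving a tenant from `shared-warmup` to a higher-reputation pool changes only this lookup, not how its traffic is identified in logs.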

Summary

With KumoMTA, there's none of the ambiguity traditionally present in multi-tenant MTA solutions. Instead of a virtual server concept that could represent a tenant but might simply represent an IP, KumoMTA makes the tenant part of the queuing architecture, resulting in fewer queue collisions; better isolation of tenants for throttling, suspension, and purging; and richer data in logs and webhooks.

Install KumoMTA today and see how it can help make the management of your tenants and campaigns much simpler and more straightforward.